Exaud Blog

Sonnet 4.5 vs. Opus 4.6 for Agentic Unit Testing

Step inside Exaud’s agentic testing pilot and discover how Anthropic’s Sonnet 4.5 and Opus 4.6 performed under real benchmarking conditions.

Posted by Georg Tubalev

As we integrate more agentic workflows into our development processes at Exaud, evaluating the underlying models becomes critical. Recently, I’ve been stress-testing a Python code-testing agent, specifically one tasked with automated test case generation and execution using pytest, as part of our agentic orchestration pilot project.

The question was simple: in a realistic, autonomous testing scenario, does the "smarter" model justify the cost, or is the efficient model "good enough"?

The results were genuinely surprising.

The Setup

We tasked two of Anthropic’s leading models, Sonnet 4.5 (released on September 29th, 2025) and the newly released Opus 4.6 (February 5th, 2026), with generating unit tests for a reference class containing various math-related functions.

To keep the baseline comparison fair, I used simple prompts without extensive chain-of-thought engineering to steer the testing approach; I wanted to see what the models would do "out of the box."

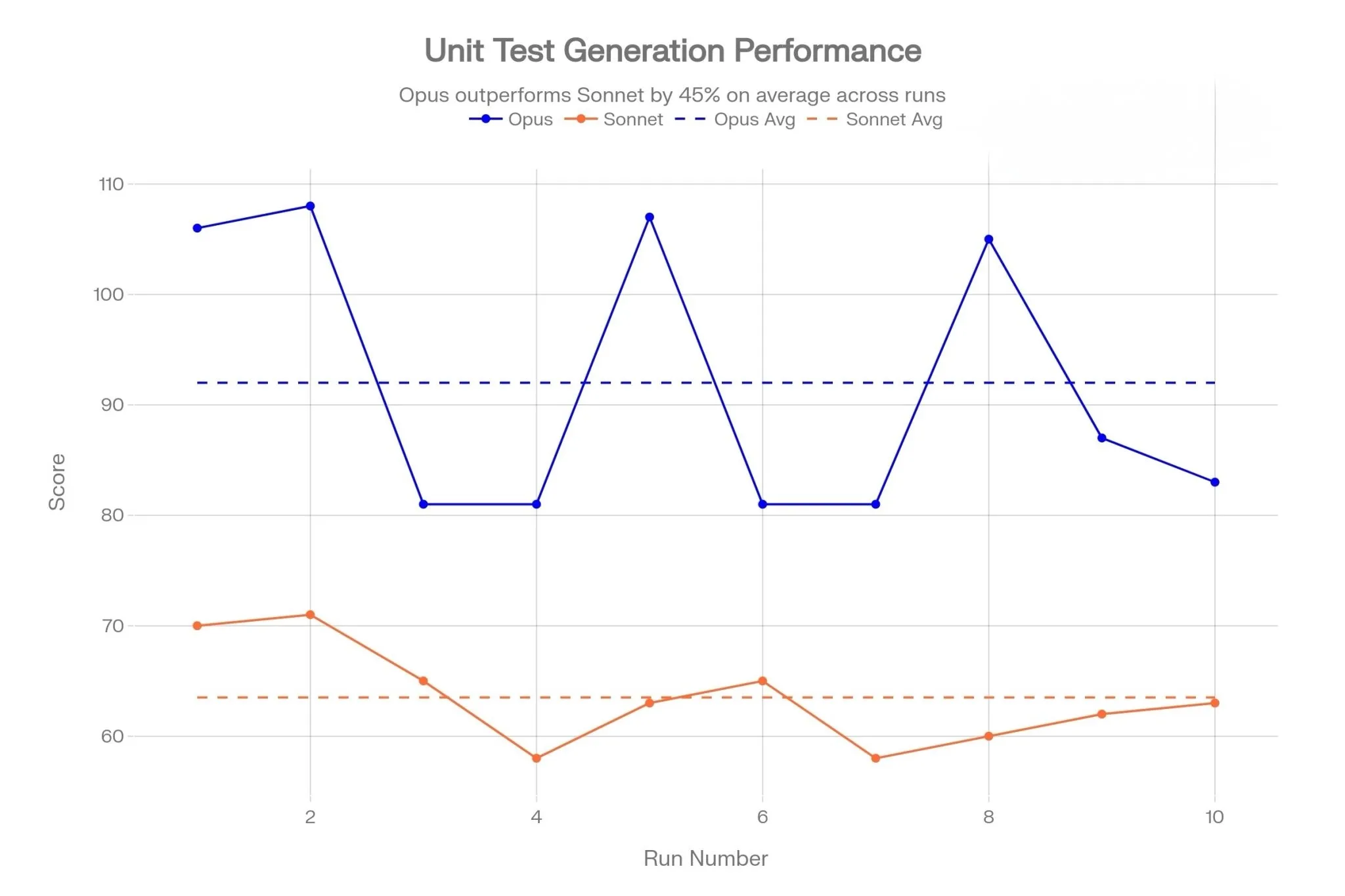

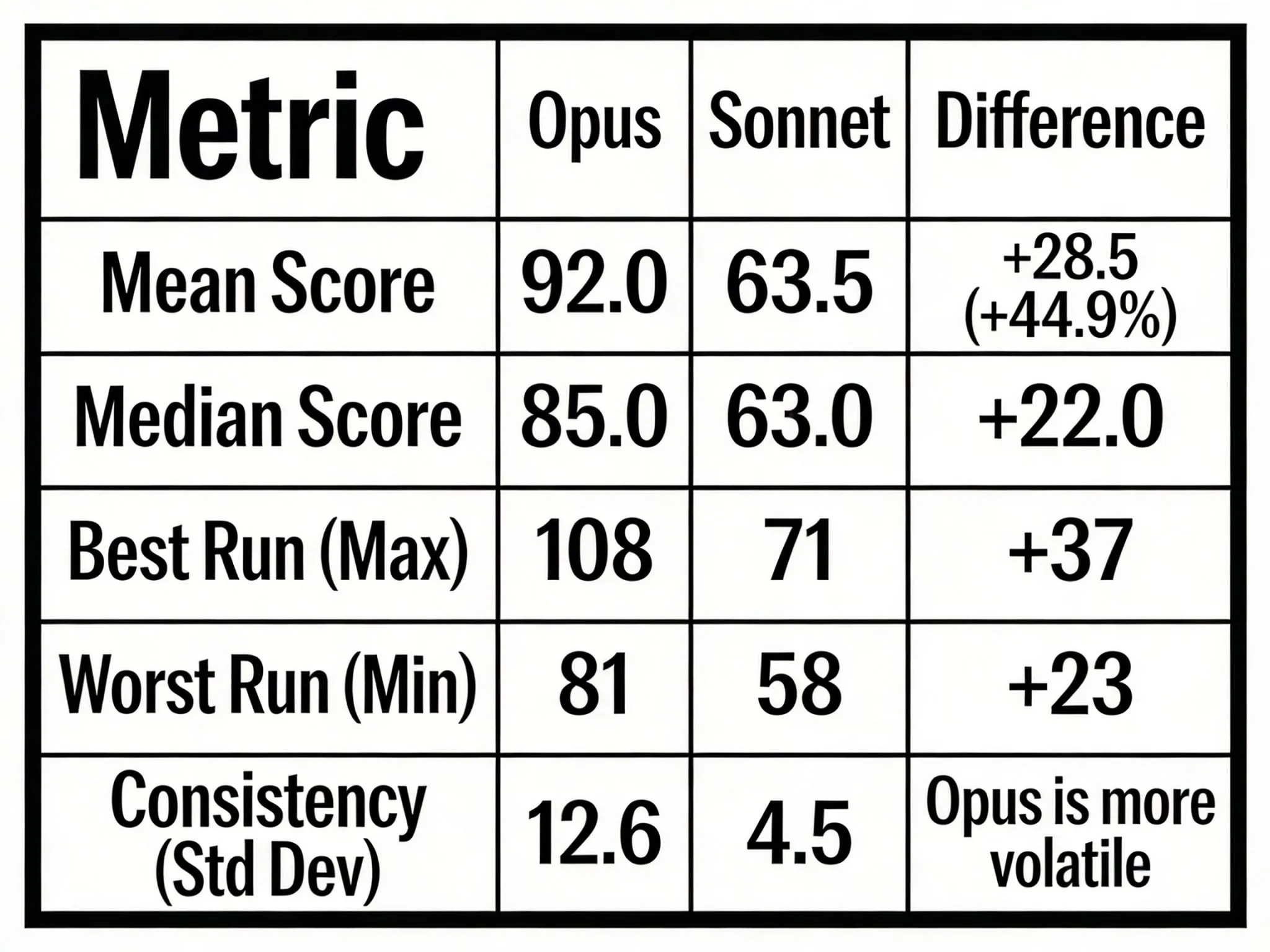

The Results: A 45% Performance Gap

The performance delta was massive:

- Sonnet 4.5 generated an average of 63 test cases.

- Opus 4.6 generated an average of 92 test cases.

That is a large gap for what should be a routine task in 2026. However, the raw numbers only tell half the story. When I dug into the generated code and the execution logs, three key insights emerged.
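As a quick sanity check on the headline figure, the sketch below computes the relative gap from the two published averages. It assumes those averages were rounded to whole tests, so it also brackets the range the true gap could fall in; `relative_gap` is an illustrative helper, not part of the original tooling.

```python
def relative_gap(baseline: float, candidate: float) -> float:
    """Return how much larger candidate is than baseline, as a fraction of baseline."""
    return (candidate - baseline) / baseline


# Average test counts per run, as published (assumed rounded to whole tests).
sonnet_avg, opus_avg = 63, 92

point = relative_gap(sonnet_avg, opus_avg)
print(f"gap from rounded averages: {point:.1%}")  # gap from rounded averages: 46.0%

# If each average is off by up to 0.5 due to rounding, the true gap lies between:
low = relative_gap(sonnet_avg + 0.5, opus_avg - 0.5)
high = relative_gap(sonnet_avg - 0.5, opus_avg + 0.5)
print(f"plausible range: {low:.1%} to {high:.1%}")  # plausible range: 44.1% to 48.0%
```

The 45% headline sits inside that range, so the rounded averages and the heading are consistent.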

Key Observations

1. The "Moodiness" of Opus is Actually a Feature (well, sort of)

At first glance, Opus appears more volatile, with a 13.7% variance across runs compared to Sonnet’s tight 7.0%. In traditional software engineering, volatility is usually a bad sign. Here, it was a sign of (sporadically applied) intelligence.

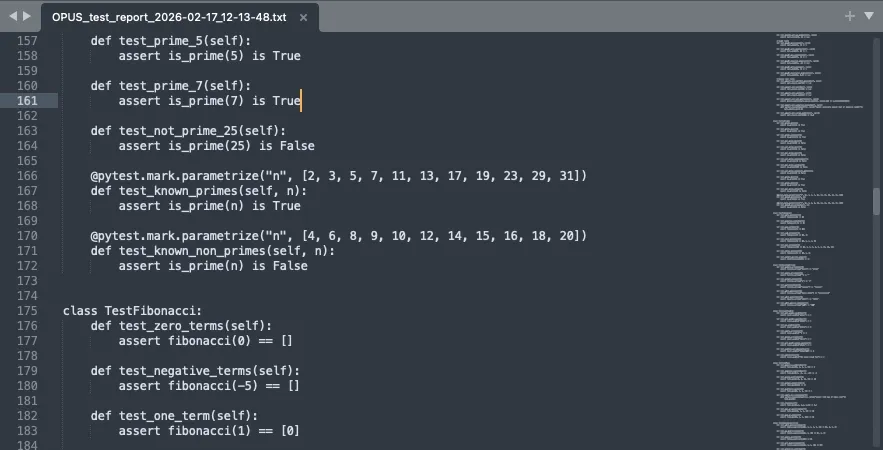
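The post reports run-to-run spread as a percentage (13.7% for Opus, 7.0% for Sonnet) without naming the statistic. A common way to express spread as a percentage is the coefficient of variation: the standard deviation divided by the mean. The sketch below assumes that is the metric; the helper name is hypothetical, and since the per-run counts are not published, no values are reproduced here.

```python
import statistics


def coefficient_of_variation(counts: list[int]) -> float:
    """Sample standard deviation of per-run test counts divided by their mean.

    Assumption: the post's "variance across runs" percentages are a CV like this.
    """
    if len(counts) < 2:
        raise ValueError("need at least two runs to measure spread")
    return statistics.stdev(counts) / statistics.mean(counts)
```

Feeding it the collected test count from each run of one model, e.g. `coefficient_of_variation(opus_counts)`, gives a scale-free number that can be compared across models even though their average counts differ.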

Opus understands how to use more advanced testing features, such as @pytest.mark.parametrize, to efficiently cover dozens of edge cases in a single sweep. When it "decides" to use this approach, its test count increases significantly. When it defaults to standard methods (feeling lazy?), its score drops closer to Sonnet’s level, but crucially, it still outperforms Sonnet in every run.
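Here is a minimal sketch of that technique, written against a hypothetical `clamp` function that stands in for the unpublished reference class. pytest collects the single decorated function below as six separate test cases, one per parameter tuple, which is why parametrized suites report much higher test counts.

```python
import pytest


def clamp(value: float, low: float, high: float) -> float:
    """Hypothetical stand-in for a function in the reference math class."""
    if low > high:
        raise ValueError("low must not exceed high")
    return max(low, min(value, high))


# One test function, six collected test cases: each tuple becomes its own test.
@pytest.mark.parametrize(
    ("value", "low", "high", "expected"),
    [
        (5, 0, 10, 5),    # inside the range
        (0, 0, 10, 0),    # exactly on the lower bound
        (10, 0, 10, 10),  # exactly on the upper bound
        (-1, 0, 10, 0),   # just below the range
        (11, 0, 10, 10),  # just above the range
        (3, 3, 3, 3),     # degenerate range where low == high
    ],
)
def test_clamp(value, low, high, expected):
    assert clamp(value, low, high) == expected
```

Running `pytest -v` on a file containing this test lists six entries, each with an auto-generated ID built from its parameter values. Writing the same coverage as plain functions would take six near-identical tests.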

2. Stability vs. Quality

Sonnet is rather consistent, but the analysis showed that it is "consistently average." It routinely skipped edge cases and boundary conditions, even on the simple, predictable reference code I used. It favored a happy-path approach that produced clean code but lower coverage.
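To make the happy-path versus boundary distinction concrete, here is a small sketch against a hypothetical `safe_sqrt` function (the reference class itself is not published, so this is an illustration, not one of its methods). The first test is the kind of happy-path check described above; the others cover the boundaries and input validation that were routinely skipped.

```python
import math

import pytest


def safe_sqrt(x: float) -> float:
    """Hypothetical reference-class function: square root that rejects bad input."""
    # bool is a subclass of int, so exclude it explicitly.
    if isinstance(x, bool) or not isinstance(x, (int, float)):
        raise TypeError("x must be a real number")
    if x < 0:
        raise ValueError("x must be non-negative")
    return math.sqrt(x)


# Happy path: often the only kind of test a minimal suite contains.
def test_safe_sqrt_typical_value():
    assert safe_sqrt(9) == 3


# Boundaries: zero and a fractional input below 1.
def test_safe_sqrt_zero():
    assert safe_sqrt(0) == 0


def test_safe_sqrt_fraction():
    assert safe_sqrt(0.25) == 0.5


# Input validation: invalid values should fail loudly, not return garbage.
def test_safe_sqrt_rejects_negative():
    with pytest.raises(ValueError):
        safe_sqrt(-1)


def test_safe_sqrt_rejects_non_numeric():
    with pytest.raises(TypeError):
        safe_sqrt("4")
```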

3. The Floor vs. The Ceiling

Perhaps the most damning metric for the cheaper model was the comparison of their extremes:

- Sonnet’s Best Run: 71 tests generated.

- Opus’s Worst Run: 81 tests generated.

Even on its worst day, the larger model outperformed the smaller model’s best attempt.

Context Matters

Overall, Opus 4.6 proved significantly better for thorough unit testing. While Sonnet is "stable" and substantially cheaper, that stability comes at the cost of missed edge cases and input validations, risks that are unacceptable in ISO 9001-certified production environments.

For agentic testing workflows, particularly for critical systems in the embedded sector where quality is a must-have, Opus is the clear winner. However, its lack of determinism remains a major concern for orchestration pipelines that require predictable, high-quality output.

Interested in Agentic Orchestration?

We are actively benchmarking and building the next generation of AI-driven development tools.

If you are interested in accessing the complete test dataset or learning more about Exaud’s solutions for agent orchestration, we welcome technical discussions and collaboration opportunities.

Contact our team to request additional details about the benchmarking methodology, dataset, or our broader approach to agentic system design.
